The Music Computing Lab is involved in a range of research projects at the intersection of Music and Computer Science. Some examples are listed below.

A Recipe for Perceived Emotions in Music

Annaliese Micallef Grimaud

Have you ever listened to an unfamiliar piece of music and perceived it as sounding sad or happy? The answer is probably yes.

A common follow-up question is: How does this work?

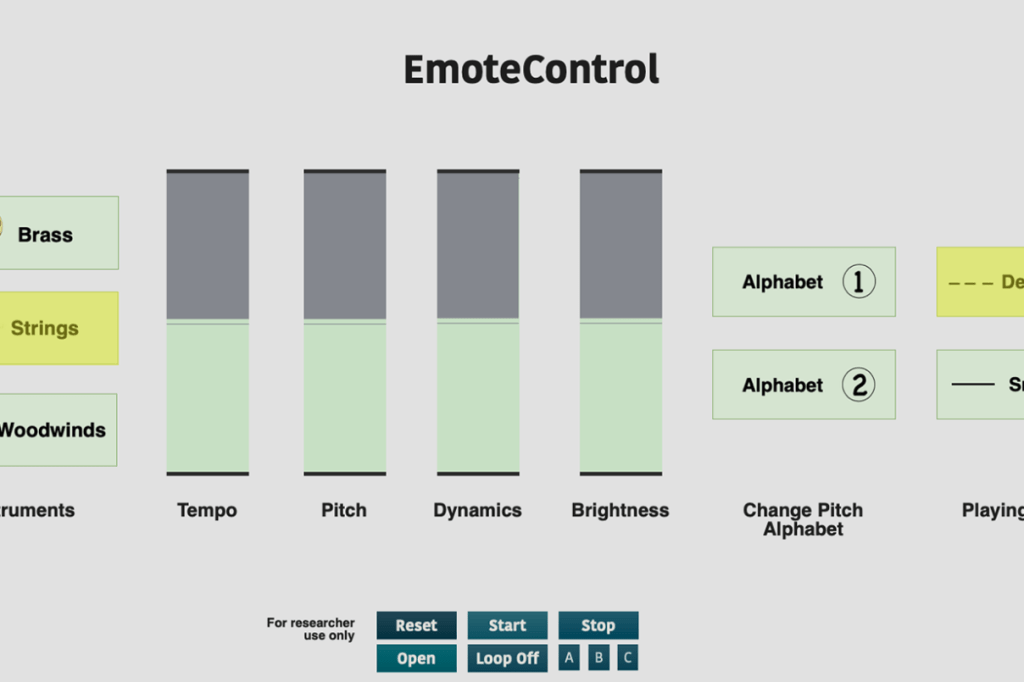

One of my research projects looked at how different musical features, such as tempo and loudness, are used to embed different emotions in a musical piece. To do this, I let music listeners themselves show me how they think different emotions should sound in music: they changed instrumental tonal music in real time via a combination of six or seven musical features, using a computer interface I created called EmoteControl. This work identified how different combinations of tempo, pitch, dynamics, brightness, articulation, mode, and, later, instrumentation helped convey different emotional expressions through the music.

Find out how 6 musical features were used to convey 7 emotions (anger, sadness, fear, joy, surprise, calmness, and power) and whether they were successful or not here: https://journals.sagepub.com/doi/10.1177/20592043211061745

Find out how the same 6 musical features plus the option to change the instrument playing were used to convey the same 7 emotions here: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0279605

Recursive Bayesian Networks

Robert Lieck

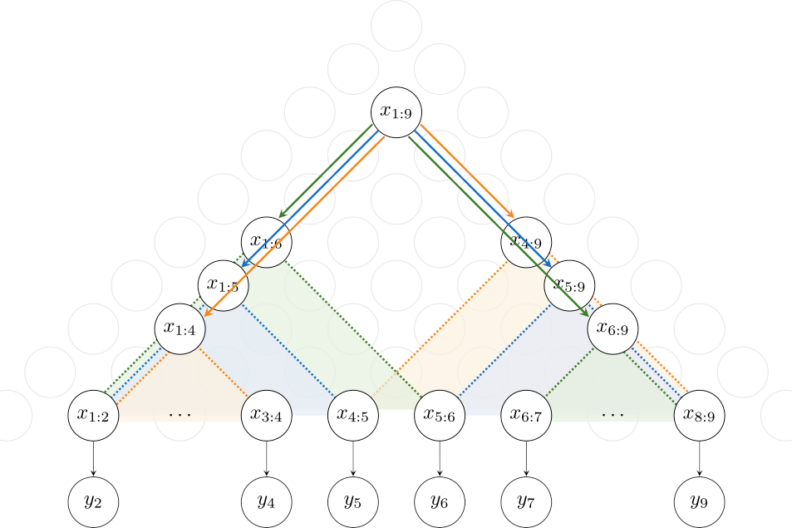

Real-world data – such as sounds, images, music, or videos – are continuous and noisy. Yet they exhibit highly complex dependency structures that are challenging to identify and may be ambiguous. Think of someone making an ambiguous joke that can be interpreted in multiple ways, an image of a complex scene with multiple people interacting in various ways, or a piece of music that evokes a multitude of emotions.

Recursive Bayesian Networks allow for modelling these intricate cases where a variety of different possible structures need to be taken into account. They generalise probabilistic context-free grammars – commonly used for modelling natural language – to allow for continuous, gradual aspects to be represented.
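To make the starting point concrete, here is a minimal sketch of the discrete case that Recursive Bayesian Networks generalise: a probabilistic context-free grammar scored with the standard inside algorithm. It is not an implementation of Recursive Bayesian Networks themselves. The toy grammar, its probabilities, and the function name `inside_probability` are assumptions made up for illustration. The point is that the probability of a sequence is the sum over all of its possible tree structures.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (illustrative assumption, not from the project):
#   S -> S S   with probability 0.4
#   S -> "a"   with probability 0.6
BINARY_RULES = {"S": [("S", "S", 0.4)]}
LEXICAL_RULES = {"S": [("a", 0.6)]}


def inside_probability(tokens, start="S"):
    """Return the total probability that `start` generates `tokens`,
    summed over every parse tree the grammar allows."""
    n = len(tokens)
    # chart[(i, j)][symbol] = probability that symbol generates tokens[i:j]
    chart = defaultdict(lambda: defaultdict(float))

    # Spans of width 1: apply the lexical rules.
    for i, token in enumerate(tokens):
        for symbol, rules in LEXICAL_RULES.items():
            for word, prob in rules:
                if word == token:
                    chart[(i, i + 1)][symbol] += prob

    # Wider spans: sum over every split point and every binary rule.
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):
                for parent, rules in BINARY_RULES.items():
                    for left, right, prob in rules:
                        chart[(i, j)][parent] += (
                            prob * chart[(i, k)][left] * chart[(k, j)][right]
                        )

    return chart[(0, n)][start]
```

Under this toy grammar, the sequence `["a", "a", "a"]` has two possible tree structures, each with probability 0.4 × 0.4 × 0.6³, so `inside_probability(["a", "a", "a"])` returns roughly 0.0691. The symbols here are purely discrete; the continuous, gradual aspects described above are what this sketch leaves out.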

Opening the Red Book

Eamonn Bell

Drawing on science and technology studies (STS), computer science, and digital media studies, this ongoing research project charts the international research and development effort that culminated in the 1980 specification of the audio CD format, often known as the “Red Book.”

Since its introduction, musicians, artists, and other researchers have successfully damaged CDs and tampered with their players, creating new forms of music and digital media practice in their wake. By exposing the limitations and error conditions of a quotidian digital technology, these practices often serve the critique of new digital artifacts.

Fully understanding these intriguing objects and the practices they afford takes an interdisciplinary approach, whether that means digging into the technical details of the CD audio standard, exploring mid-90s Web archives, or conducting oral history interviews. This project has been funded by the Irish Research Council (2019–2021) and Durham University (2022–2023).